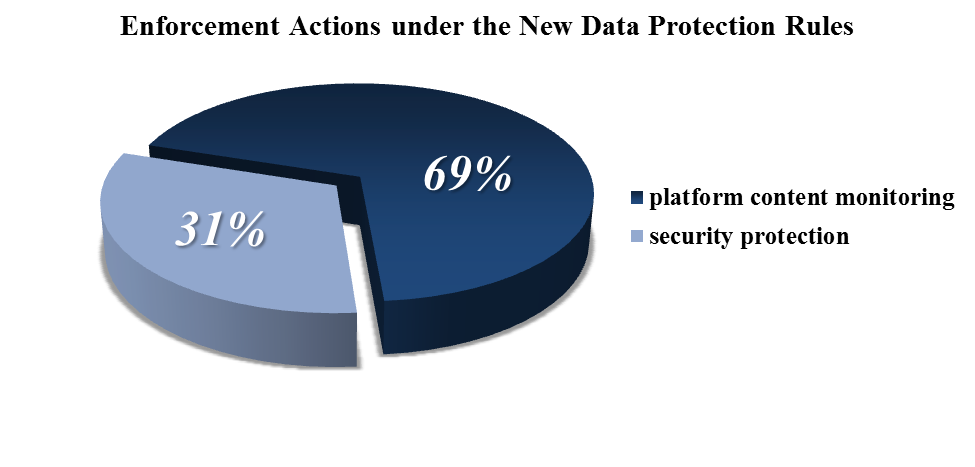

Following the first enforcement actions by local authorities in Shantou and Chongqing under the new Network Security Law, which came into effect this year, authorities in China have shown a clear early focus: several new cases target the law's provisions requiring companies to monitor platform content. As of the start of October 2017, platform content violations accounted for nearly 70 percent of all publicly announced enforcement actions under the law's new provisions.

A smaller but notable share of actions has targeted security failures under the “graded protection system monitoring” requirements. These include failing to resolve security vulnerabilities, failing to retain users’ login information, and connecting to known problematic websites. Other related enforcement actions have targeted offenses that existed before the Network Security Law, such as illegally providing or obtaining personal information. However, few enforcement actions have addressed the parts of the new rules that have drawn headlines over the past year, especially data localization, cross-border transfer restrictions and security assessments.

Instead, platform content violations have been the clear focus of enforcement, making up 11 of the 16 recently publicly announced actions. These cases primarily target companies’ failures to monitor and delete user-published information that violates rules on political speech, pornography, fraud, personal information or similar restricted content. For example, in September, the Communication Authority in Guangdong punished an IT company for failing to stop false and illegal information from spreading on its platform. The company had detected users spreading “illegal and hazardous” information on its podcasting app, Lizhi FM. However, it neither took measures to stop the practice nor kept relevant records for reporting to the authorities, and so violated its platform content monitoring obligations under the Network Security Law.

Alongside, and in some cases ahead of, this enforcement trend, the Office of the Central Leading Group for Cyberspace Affairs has recently released several detailed sets of provisions on platform content monitoring, including the following:

- Provisions on the Administration of Internet News Information Services

- Administrative Provisions on Internet Live-Streaming Services

- Administrative Provisions on Internet Forum Community Services

- Administrative Provisions on Comment Posting Services on the Internet

- Administrative Provisions on User Account Names on the Internet

- Administrative Provisions on Official Account Information Services

- Administrative Provisions on Group Information Services

These new rules and the more detailed guidance, coupled with the significant increase in enforcement actions, have caught many companies by surprise and set off a rush to build platform content monitoring. One leading technology company recently advertised a recruitment drive for 1,000 people to monitor content manually. Other companies are offering special rewards to users who monitor their peers, and still others are promising to build AI systems for automatic detection and removal. The challenge is particularly difficult for companies that built content platforms incidentally. Many added social media features and user groups to engage customers, and they now find themselves responsible for the daunting task of monitoring and controlling customer content.

A practical first step for companies is to identify their existing platforms, especially features that were not designed as platforms but have grown into them. Your fitness company’s app that lets users post workout statistics and share their progress, your mobile game that added a chat feature so users can coordinate attacks with friends, or your public customer outreach Q&A page may each have added just enough functionality to qualify as a platform under these rules. Once the platforms are identified, an analysis of the law and regulations gives a fairly clear picture of what must be monitored and controlled. The key challenge remains implementing the content controls. That struggle has repeatedly made news for regulators in several jurisdictions and for large tech companies around the world, and it still lacks an effective, scalable solution.